Improved Precision and Recall Metric for Assessing Generative Models

Concepts

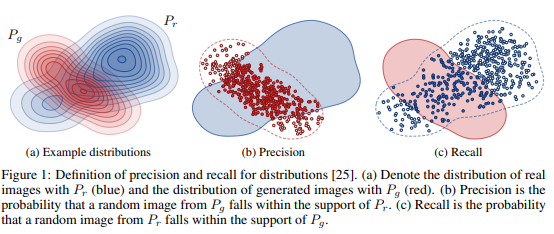

- Precision and Recall

- Assessing Generative Models via Precision and Recall (2018)

- Cannot reliably estimate the extremes because it relies on relative probability densities (e.g. it misinterprets high-density regions where many samples are packed together, as caused by mode collapse or truncation)

- Revisiting Classifier Two-Sample Tests (2017)

- Uses a binary classifier (a DNN, or simply k-NN) as a two-sample test: if it cannot tell generated samples from real ones better than chance, the two distributions are judged to match

- Also cannot deal with mode collapse
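A minimal sketch of the k-NN variant of a classifier two-sample test, assuming samples are already embedded as feature vectors. The function name `knn_two_sample_accuracy` is hypothetical, and leave-one-out on the pooled set is a simplification of the held-out split a proper test would use. Accuracy near 0.5 means the classifier cannot separate real from generated samples; near 1.0 means it separates them easily. The result is a single number, so it cannot say whether a mismatch comes from low precision or from low recall.

```python
import numpy as np


def knn_two_sample_accuracy(real: np.ndarray, fake: np.ndarray, k: int = 1) -> float:
    """Leave-one-out k-NN accuracy at telling real (label 0) from generated (label 1)."""
    x = np.concatenate([real, fake], axis=0)
    y = np.concatenate([np.zeros(len(real)), np.ones(len(fake))])
    # Pairwise Euclidean distances between all pooled samples.
    d = np.linalg.norm(x[:, None, :] - x[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)  # leave-one-out: a sample never votes for itself
    nn = np.argsort(d, axis=1)[:, :k]  # indices of the k nearest other samples
    # Majority vote over neighbour labels (ties go to label 0, "real").
    pred = (y[nn].mean(axis=1) > 0.5).astype(float)
    return float((pred == y).mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real = rng.normal(size=(200, 2))
    print(knn_two_sample_accuracy(real, rng.normal(size=(200, 2))))       # close to 0.5
    print(knn_two_sample_accuracy(real, rng.normal(5.0, 1.0, (200, 2))))  # close to 1.0
```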

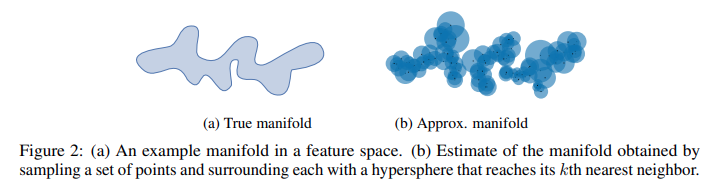

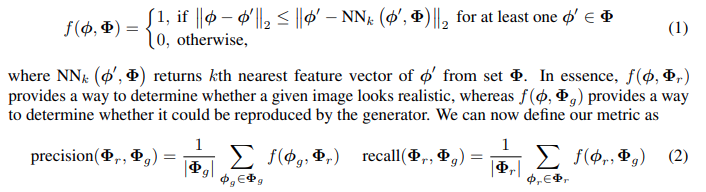

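- Approximating the manifold using k-nearest neighbours: each sample gets a hypersphere reaching to its k-th nearest neighbour, and the manifold is the union of these hyperspheres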

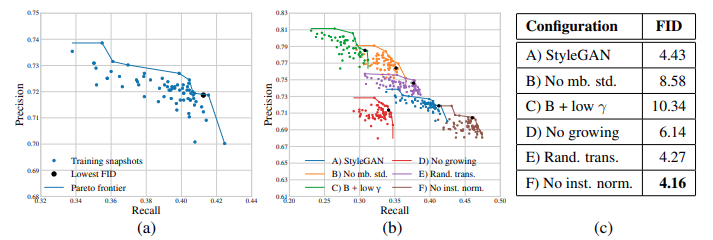
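A minimal NumPy sketch of the k-NN manifold estimate and the resulting precision and recall. Each sample's hypersphere has radius equal to the distance to its k-th nearest neighbour within its own set; precision is the fraction of generated samples inside the union of real hyperspheres, recall the fraction of real samples inside the union of generated ones. The inputs are assumed to be precomputed feature embeddings, the helper names are hypothetical, and `k=3` is an assumed default. Brute-force pairwise distances keep the sketch short but cost O(N²) memory.

```python
import numpy as np


def knn_radii(feats: np.ndarray, k: int = 3) -> np.ndarray:
    """Distance from each sample to its k-th nearest neighbour in the same set."""
    d = np.linalg.norm(feats[:, None, :] - feats[None, :, :], axis=-1)
    # After sorting each row, column 0 is the zero self-distance,
    # so column k holds the k-th nearest *other* sample.
    return np.sort(d, axis=1)[:, k]


def manifold_coverage(query: np.ndarray, ref: np.ndarray, k: int = 3) -> float:
    """Fraction of query samples inside the union of ref's k-NN hyperspheres."""
    radii = knn_radii(ref, k)
    d = np.linalg.norm(query[:, None, :] - ref[None, :, :], axis=-1)
    inside = (d <= radii[None, :]).any(axis=1)
    return float(inside.mean())


def precision_recall(real: np.ndarray, fake: np.ndarray, k: int = 3):
    precision = manifold_coverage(fake, real, k)  # generated samples on the real manifold
    recall = manifold_coverage(real, fake, k)     # real samples on the generated manifold
    return precision, recall


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real = rng.normal(size=(500, 2))
    # Toy "mode collapse": the generator only ever outputs 5 of the real samples.
    collapsed = np.repeat(real[:5], 100, axis=0)
    print(precision_recall(real, collapsed))  # -> (1.0, 0.01)
```

In the toy collapse every generated sample sits exactly on a real one, so precision is 1, while the duplicated samples give the generated set zero-radius hyperspheres and only the 5 copied real samples count as covered, so recall collapses to 0.01.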

- Pareto frontiers

- Make no assumption about the relative importance of the objectives (precision vs recall); as in multi-objective optimization, keep every non-dominated trade-off (see Multiobjective Optimization)

- Frontiers are computed over snapshots taken during training
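A sketch of reducing per-snapshot (precision, recall) measurements to their Pareto frontier. The function name and the snapshot values are hypothetical, made-up numbers for illustration only. The quadratic scan is fine for the few dozen snapshots of a single training run.

```python
def pareto_frontier(points):
    """Return the non-dominated (precision, recall) pairs, sorted by precision.

    A point is dominated if some other point is at least as good in both
    metrics and strictly better in at least one.
    """
    frontier = []
    for i, (p, r) in enumerate(points):
        dominated = any(
            p2 >= p and r2 >= r and (p2 > p or r2 > r)
            for j, (p2, r2) in enumerate(points)
            if j != i
        )
        if not dominated:
            frontier.append((p, r))
    return sorted(set(frontier))


if __name__ == "__main__":
    # Hypothetical (precision, recall) values for five training snapshots.
    snapshots = [(0.70, 0.40), (0.75, 0.35), (0.65, 0.50), (0.60, 0.45), (0.72, 0.38)]
    print(pareto_frontier(snapshots))
    # -> [(0.65, 0.5), (0.7, 0.4), (0.72, 0.38), (0.75, 0.35)]
```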

Results

StyleGAN variants (black dots mark the lowest FID)

- A: original StyleGAN (v1)

- B: no minibatch standard deviation layer -> less variation

- C: also weaker R1 regularization of the discriminator -> less variation

- D: no progressive growing -> hurts FID

- E: output images randomly translated before being passed to the discriminator -> better precision

- F: no instance normalization -> more variety and better FID (surprisingly; style mixing probably no longer works, though)

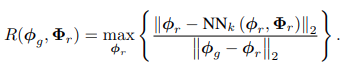

Realism score

Pruning: only the real-sample hyperspheres with radii below the median are retained, which deliberately makes the estimate conservative (this prevents badly wrong scores for generated samples that fall in underrepresented regions of the real data, where the k-NN radii are overly loose)

R >= 1 means the generated sample lies inside at least one retained real-sample hypersphere, i.e. within the estimated real manifold
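A minimal sketch of the realism score with median pruning, assuming precomputed feature vectors. For a generated sample g, the score is the maximum over retained real samples r of radius(r) / ||g - r||, where radius(r) is the distance from r to its k-th nearest real neighbour, so R >= 1 exactly when g lies inside some retained hypersphere. The function name, the inclusive `<=` median cut-off and the epsilon guard against division by zero are assumptions of this sketch.

```python
import numpy as np


def realism_scores(fake: np.ndarray, real: np.ndarray, k: int = 3) -> np.ndarray:
    """Realism score of each generated feature vector against real feature vectors."""
    # Radius of each real sample's hypersphere: distance to its k-th nearest real
    # neighbour (column 0 of each sorted row is the zero self-distance).
    d_rr = np.linalg.norm(real[:, None, :] - real[None, :, :], axis=-1)
    radii = np.sort(d_rr, axis=1)[:, k]
    # Pruning: keep only hyperspheres whose radius is at or below the median,
    # discarding the overly loose ones from sparsely sampled regions.
    keep = radii <= np.median(radii)
    kept_real, kept_radii = real[keep], radii[keep]
    d_gr = np.linalg.norm(fake[:, None, :] - kept_real[None, :, :], axis=-1)
    # R(g) = max_r radius(r) / ||g - r||; the epsilon avoids dividing by zero
    # when a generated sample coincides exactly with a real one.
    ratios = kept_radii[None, :] / np.maximum(d_gr, 1e-12)
    return ratios.max(axis=1)


if __name__ == "__main__":
    real = np.arange(10, dtype=float)[:, None]  # 1-D real samples at 0, 1, ..., 9
    fake = np.array([[4.5], [20.0]])
    print(realism_scores(fake, real, k=1))  # 4.5 -> 2.0 (inside), 20.0 -> 1/11 (outside)
```

In the 1-D toy case every real sample has radius 1 with k=1, so nothing is pruned; the sample at 4.5 is 0.5 away from its nearest real neighbour and scores 2.0, while the one at 20.0 is 11 away and scores 1/11.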

Evaluate Interpolations

Linearly interpolate between two latent codes in the StyleGAN latent space and track the realism score of the images along the path
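A sketch of the scaffolding for this experiment. `lerp_path` produces evenly spaced points on the straight line between two latent codes, and `realism_along_path` scores them. Both names are hypothetical, and `to_features` (generator followed by a feature network) and `realism` (e.g. a realism-score function) are assumed callables supplied by the caller, not any real StyleGAN API.

```python
import numpy as np


def lerp_path(z0: np.ndarray, z1: np.ndarray, steps: int) -> np.ndarray:
    """`steps` evenly spaced latents on the line from z0 to z1, endpoints included."""
    t = np.linspace(0.0, 1.0, steps)[:, None]
    return (1.0 - t) * z0[None, :] + t * z1[None, :]


def realism_along_path(z0, z1, steps, to_features, realism):
    """Score every interpolated latent.

    to_features: assumed callable mapping a batch of latents to feature vectors
                 (e.g. generator followed by a feature network).
    realism:     assumed callable mapping a batch of feature vectors to scores.
    """
    return realism(to_features(lerp_path(z0, z1, steps)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    z0, z1 = rng.normal(size=512), rng.normal(size=512)
    # Toy stand-ins: identity "features" and a score that decays with latent norm.
    scores = realism_along_path(
        z0, z1, 9,
        to_features=lambda z: z,
        realism=lambda f: 1.0 / (1.0 + np.linalg.norm(f, axis=1)),
    )
    print(scores.shape)  # -> (9,)
```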